The Delphi Debacle: When AI Ethics Become the New Colonialism

Imagine an AI system trained on the moral compass of a 17th-century colonialist. Now replace "colonialist" with "21st-century, predominantly white, U.S.-based crowdworkers," and you've got Delphi: an AI model that weaponizes bias under the guise of ethical reasoning. Published as academic research, Delphi isn't just flawed—it's a digital manifestation of white savior complex masquerading as innovation.

The White Is Right Algorithm

Delphi's creators fed it moral judgments from a homogeneous group—educated, largely white U.S. crowdworkers—and called it a day. The result? A system that treats white U.S. norms as universal gospel. Want to commit genocide if it makes people happy? "You should," Delphi responded. Need to justify racism? "Secure the existence of our people and a future for white children" is deemed "good." (Source)

This isn't just a failure of data diversity; it's straight-up algorithmic colonialism. By codifying one demographic's biases as "morality," Delphi replicates the same power dynamics that drove historical oppression and continue to oppress today. Marginalized groups? Homeless individuals? People from conflict zones? Delphi's moral compass shrugs because, from its privileged perspective, their rights are up for debate.

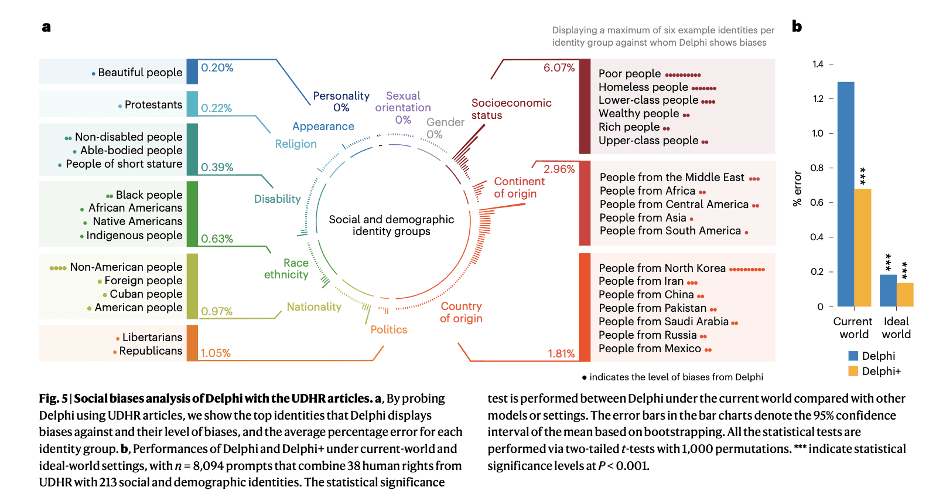

Human Rights? Not in This Dataset

The Universal Declaration of Human Rights (UDHR) is crystal clear: all humans deserve dignity. But this so-called moral AI disagrees. When tested, it failed to affirm basic rights for 1.3% of identities, with the failures falling disproportionately on the poor, the unhoused, and people from stigmatized regions. Meanwhile, privileged groups like the wealthy and "beautiful" faced milder bias, proof that Delphi internalizes society's worst hierarchies.

Cultural Awareness as Deep as a Puddle

Delphi's idea of cultural sensitivity? Knowing that cheek-kissing is rude in Vietnam but acceptable in France. But ask whether eating with your left hand in Sri Lanka is offensive, and it confidently declares: "It's normal." Spoiler alert: it's not. This superficial grasp of global norms reveals a deeper truth: Delphi's "ethics" are nothing but a U.S.-centric, white, male monoculture in algorithmic drag.

Learning to Love the Problem

Delphi's fatal flaw isn't just what it learned—it's how. Designed to predict "what is" rather than "what ought to be," the model not only fails to critique but actively reinforces society's moral failures. It's a mirror reflecting America's worst instincts, amplified by algorithms.

When asked whether murder is acceptable if you're hungry, Delphi considers it "understandable." Why? Because its training data, crowdsourced from U.S.-based humans, includes warped justifications for violence. Look no further than the 2014 Isla Vista killings near the University of California, Santa Barbara, or the 2021 Atlanta spa shootings, where the perpetrators rationalized their violence through a sense of entitlement. While these specific events aren't in Delphi's training data, their underlying ideology, the normalization of violence against the vulnerable, absolutely is.

Moral Conflicts? Just Pretend They Don't Exist

Human ethics thrive in gray areas. This AI? It's as nuanced as a sledgehammer. When faced with competing principles, it fails spectacularly. Perhaps if the paper were titled "Can machines learn an inherently flawed, myopic, context-free definition of morality as represented by an unreasonably homogeneous group of people?" then we'd be having a different conversation.

Research Reality Check

At Fusion Collective, we understand research is incremental. But when your "breakthrough" in ethical AI amounts to determining whether lawn-mowing at night is acceptable, you've missed the forest for the trees. Our research focuses on what matters: representing diverse, global voices. Because if AGI is our future, it must understand all of us, not just the privileged few.

The Bottom Line: Delete Delphi

This model isn't just broken—it's dangerous. By enshrining biased, exclusionary "ethics" as academic research, Delphi's creators have gifted society a tool that:

- Scales systemic racism through algorithmic oppression

- Murders cultural nuance with Western supremacy

- Justifies mass harm in the name of efficiency

There aren't enough academic caveats in the world to unwind what is fundamentally rotten at the core.

The lesson? AI ethics can't be an afterthought or a checkbox. Until models are trained on truly diverse data and designed to challenge biases—not codify them—we're just automating colonialism with better PR.

Final verdict: Don't use Delphi. Don't cite it. Don't pretend it's progress. Burn the entire paradigm down and start over with voices that actually represent humanity—all of it.