Why AI Policy Feels Like a Sci-Fi Villain Origin Story

So apparently, we're all living in a sci-fi movie, except instead of chosen ones and epic soundtracks (e.g., Hans Zimmer, John Williams, Ennio Morricone), we got tech bros with apartheid nostalgia deciding our digital future.

I've spent over three decades in technology, and I was raised by human mood rings, never quite sure which version of the adults I'd encounter when I rounded the corner. So I've learned to spot patterns. The current AI Action Plan? It's giving me serious Emperor Palpatine vibes:

"We need emergency powers to save democracy by... removing democratic oversight."

Let me break down why this feels less like innovation and more like a villain origin story with really good PR.

The Palpatine Playbook: Emergency Powers Edition

Remember when Palpatine convinced everyone he needed "emergency powers" to save democracy? Yeah, well, meet the AI Action Plan: "We need to remove regulations to... *checks notes*... protect you from regulations!"

It's like asking the fox to guard the henhouse, except the fox went to Stanford and has a really compelling PowerPoint about disruption and the naturally gifted. The plan's first pillar literally promises to "Remove Red Tape and Onerous Regulation." Because apparently, when you're holding a technology that can reshape the world, the logical first step is to remove oversight.

This isn't innovation; it's institutional amnesia with venture capital backing.

The Force-Feed Economy: Fiber That Hallucinates

Here's my favorite part: Only 8% of people actually WANT to pay for AI, so what’s Big Tech's solution? FORCE-FEED IT TO EVERYONE.

It's like your relative who insists you need more fiber in your diet, except the fiber costs $20/month and occasionally hallucinates fried fatty foods and pound cake. Google's AI Overview, Microsoft's Copilot integration, AI features shoved into every app and service: you literally can't opt out anymore.

When was the last time you saw an innovation that "revolutionized everything" but had the consumer appeal of expired milk? The math simply isn't mathin’.

International Relations: How to Lose Friends and Alienate Countries

"But we're beating China!" they cry, while literally 55% of countries now view us LESS favorably than... drumroll... CHINA.

That's like losing a popularity contest to the asshat who thinks they're so important they can take their very important business call about the upcoming merger or potential buyout on speaker in the quiet car. How do you even recover from that?

The 2025 Democracy Perception Index surveyed over 110,000 people from 100 countries. For the first time in the survey's history, America's net favorability plummeted from +20% to -5%, while China's rose from +5% to +14%. Even Canada's positive perceptions of the US dropped from 52% to just 19%.

When your diplomatic strategy results in the majority of the world viewing you less favorably than China, promising to "export American AI standards" becomes an exercise in wishful thinking. Who exactly is going to voluntarily adopt technology standards from a country they fundamentally distrust?

Environmental Racism Gets an AI Upgrade

Meanwhile, Elon's running unpermitted gas turbines (as of July 2, only 5 had been permitted) in predominantly Black neighborhoods to power his AI supercomputer. Because nothing says "innovation" like giving people asthma so your robot can write better poetry while opining as Hitler.

The NAACP is suing.

The facts are damning: xAI installed 35 methane gas turbines near Boxtown, a Memphis community that already faces cancer risks four times the national average. The turbines emit nitrogen oxides, formaldehyde, and other hazardous chemicals in an area that has received an "F" grade for air quality in four of the last five years. This is environmental racism with a tech upgrade, and the AI Action Plan promises to accelerate it through "streamlined permitting" that explicitly rejects "radical climate dogma and bureaucratic red tape."

Huh?

Department of Education: Now You See It, Now You Need It for AI Training

Folks, I now present to you the crown jewel of government logic: simultaneously eliminating the Department of Education while betting our entire AI future on... wait for it... the Department of Education.

Here's the play-by-play of this masterclass in contradiction: This administration has already gutted the Department of Education's staff by nearly half and is actively working to shutter it completely. The Supreme Court just gave them the green light for these massive cuts.

But wait, there's more!

Now, the very same AI Action Plan that's supposed to revolutionize America extensively discusses how the Department of Education will "prioritize AI skill development as a core objective." You know, the department they're busy dismantling with a sledgehammer like it's a piece of IKEA furniture they can't figure out.

The magic trick here is promising an "AI-skilled workforce" while systematically destroying the infrastructure that protects civil rights, promotes equity, and provides opportunities for marginalized communities: you know, precisely the people most vulnerable to biased AI systems.

It's governmental prestidigitation at its finest: Now you see it, now you don't, but somehow you still need it to save the future!

Come on, make it make sense.

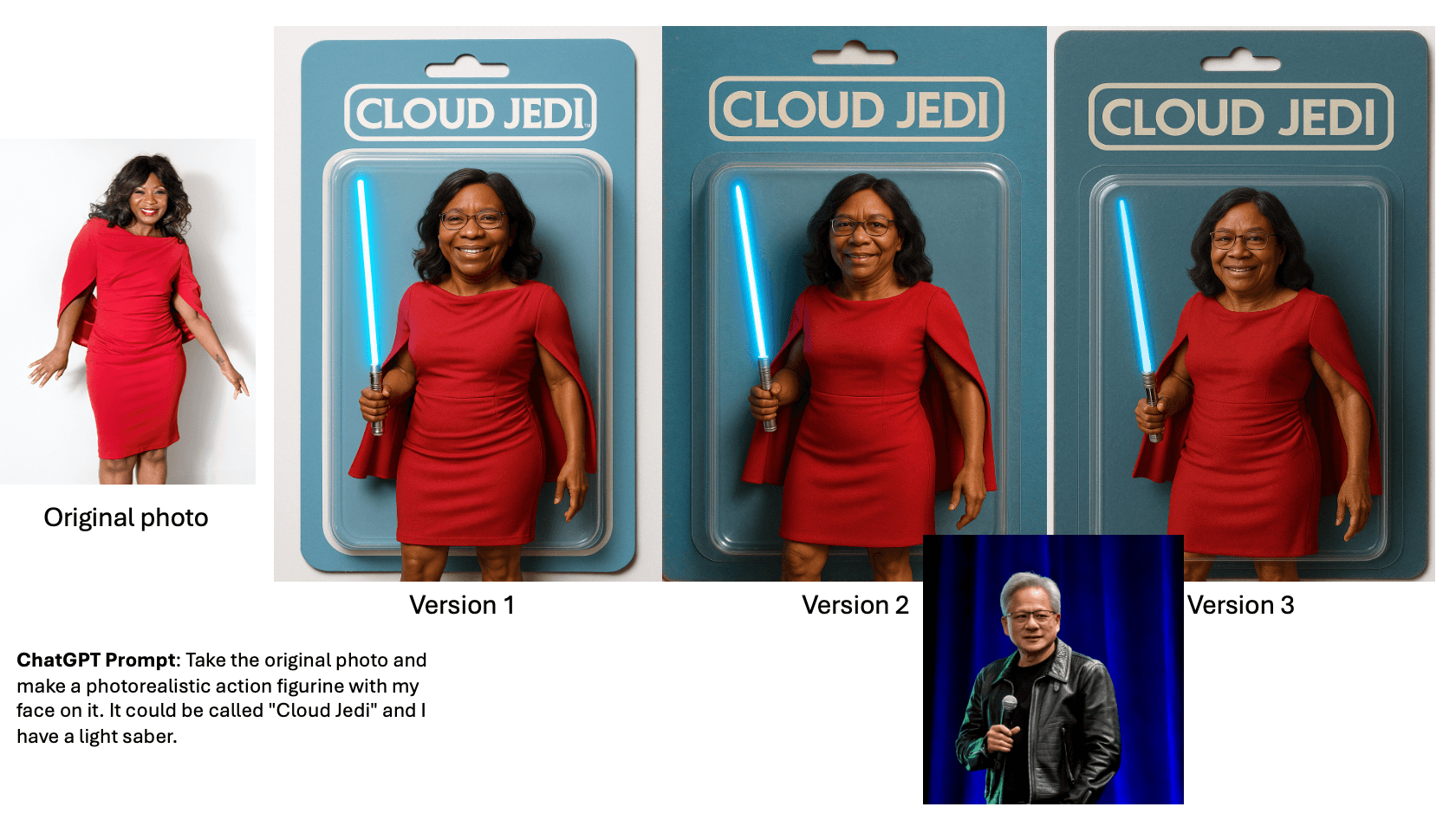

The Personal Reality Check: When AI Can't See You

The kicker? When I asked AI to make my action figure, it gave me Jensen Huang's face. If AI can't see a successful tech CEO properly, what makes us think it'll fairly judge your job application, healthcare needs, or mortgage application?

This wasn't a glitch; it was a feature of systems built without accountability to the communities they serve. AI systems don't fail randomly. They fail predictably, systematically, and spectacularly on people who don't look like the people who built them.

The 2008 Déjà Vu: Same Energy, Different Decade

Look, we've seen this movie before. In 2008, we trusted finance bros to self-regulate. That worked out GREAT.

The cost? Over $20 trillion in lost GDP, millions of lost homes, and a generation still recovering from economic devastation. The root cause wasn't complexity; it was simple: we removed regulations, trusted industries to police themselves, and assumed smart people with profit motives would naturally do the right thing.

They didn't. They couldn't. The incentives were wrong, all wrong.

Now we're trusting tech bros who literally wrote a book called "The Diversity Myth" to build fair AI systems. The plan's intellectual DNA traces back to South African-born tech billionaires who have carried apartheid-era attitudes into American technology policy. David Sacks, the US AI and crypto czar, co-authored "The Diversity Myth" with Peter Thiel, criticizing multiculturalism and affirmative action. When the plan calls for eliminating any and all references to "Diversity, Equity, and Inclusion" from AI frameworks, this isn't a policy preference; it's the systematic removal of protections designed to prevent AI from replicating apartheid-style discrimination.

But hey, what could possibly go wrong?

The Business Reality: When Strategic Reversals Become the Norm

Here's a statistic that should terrify every boardroom: 55% of business leaders who laid off workers because of AI deployment now admit they made the wrong decisions about those layoffs.

This isn't just a statistic; it's the largest documented strategic reversal in corporate AI adoption history. Meanwhile, 39% of business leaders report employees quitting as a direct result of AI implementation, and 35% acknowledge a lack of AI expertise as one of the biggest barriers to successful deployment.

Yet they're firing people anyway.

The "$20/month agent fantasy," where AI magically learns your business and replaces human workers? Pure vaporware. But companies keep chasing it because admitting they don't understand the technology would require actual accountability.

The Choice We're Making

The choice is simple: We can build AI that serves human dignity, or we can let people who think apartheid had some good ideas automate discrimination at scale.

Choose wisely.

What Real Leadership Looks Like

Real AI leadership means acknowledging that we're not in a sci-fi movie where technology automagically saves us. It means doing the hard work of:

- Building systems with intention, not just automation for automation's sake.

- Testing for bias before deployment, not after communities get harmed.

- Including diverse voices in development, not just in marketing materials.

- Honoring environmental justice, not poisoning poor communities for any reason (e.g., innovation or marginal efficiency gains).

- Maintaining democratic oversight, not removing regulations because they're inconvenient.

- Building international trust through shared values, not technological dominance.

The Present Moment

We take care of the future best by taking care of the present moment.

- Right now, we have AI systems making biased decisions. This is a FACT.

- We have communities being poisoned by data centers. This is a FACT.

- We have educational infrastructure being destroyed while being promised workforce development. This is a FACT.

The present moment demands that we acknowledge these realities and build systems that protect human dignity from the start.

Your Move

The AI Action Plan has made its choice.

The architects have revealed their values through their policies, their partnerships, and their profits. When people show you who they are (through apartheid nostalgia, environmental racism, and open admissions that they'll work with "bad people" for profit), believe them.

Now we have a choice to make.

The children in underfunded schools being steered away from professional careers by biased AI counselors can't wait for perfect policy. The vulnerable individuals receiving harmful advice from unregulated AI "therapists" can't wait for comprehensive frameworks. The communities being poisoned by unpermitted data centers can't wait for better environmental review.

But there's still time to get this right if we act with the moral clarity and political courage this moment demands.

The hobbits didn't give up when facing overwhelming darkness. They understood that the most powerful force against systems of oppression isn't grand gestures; it's ordinary people choosing to do the right thing, consistently, even when it's hard.

Especially when it's hard.

What choice are you making?

The Death Star had great marketing too, but the Rebellion had something more powerful: the conviction that everyone deserves dignity and freedom.

Share your thoughts in the comments or reach out if you want to discuss how we can build AI systems that actually serve everyone.